Performing accurate time synchronization between two devices

You can use Lab Streaming Layer (LSL) to synchronize data recorded with two Pupil Labs Companion Devices.

Description of the Choreography

We placed two Pupil Invisible Glasses in front of a light source, with their eye cameras facing the light. The scene cameras were detached from the glasses, and the light source was switched off during setup. Both Companion Devices were set up to upload their recorded data to the same Workspace.

An LSL relay was started for each pair of Pupil Invisible Glasses, and the two LSL Event streams were recorded with the LabRecorder. We did not record the LSL Gaze streams for this example.

Once the LSL streams were running and being recorded by the LabRecorder, we started a recording in the Pupil Invisible Companion App. From then on, the following data series were being recorded:

- Event data from each of the two Pupil Invisible Glasses (two data streams), streamed via LSL and recorded with the LabRecorder.
- Event data from each pair of Pupil Invisible Glasses, saved locally on its Companion Device during the recording and uploaded to Pupil Cloud once the recording was completed.
- Eye camera images from each pair of Pupil Invisible Glasses, saved locally on its Companion Device and uploaded to Pupil Cloud once the recording was completed. The 200 Hz gaze position estimate is computed in Pupil Cloud.

With this setup in place and all recordings running, we switched the light source pointing at the eye cameras on and off four times. This produced a simultaneous signal (an increase in brightness) in the recordings of all eye cameras.

Afterwards, we stopped all recordings in reverse order (first in the Companion App, then in the LabRecorder) and finally stopped the LSL relays.

Comparing NTP and LSL time sync

We used the post-hoc time alignment tool to calculate the time alignment between each of the two Companion Devices and the computer running the LSL relays.
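Each alignment is stored as a pair of linear models (`"model_type": "LinearRegression"`), one per direction. To illustrate what such a model is, and not how the tool actually computes it, here is a stdlib-only ordinary least-squares sketch. It assumes you already have paired timestamps of the same events in both clocks, in seconds. `LinearTimeModel` and `fit_linear_time_model` are hypothetical names, not part of `pupil_labs.lsl_relay`.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class LinearTimeModel:
    """Hypothetical model: maps a timestamp x (seconds) to slope * x + intercept."""

    slope: float
    intercept: float

    def predict(self, x: float) -> float:
        return self.slope * x + self.intercept


def fit_linear_time_model(
    source: Sequence[float], target: Sequence[float]
) -> LinearTimeModel:
    """Ordinary least-squares fit of target ~ slope * source + intercept.

    Timestamps are centered on their means before computing the sums of
    products, which limits precision loss with large Unix-epoch values.
    """
    if len(source) != len(target) or len(source) < 2:
        raise ValueError("need at least two paired timestamps of equal count")
    n = len(source)
    mean_x = sum(source) / n
    mean_y = sum(target) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(source, target))
    var = sum((x - mean_x) ** 2 for x in source)
    if var == 0:
        raise ValueError("source timestamps must not all be equal")
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return LinearTimeModel(slope=slope, intercept=intercept)
```

For example, `fit_linear_time_model(cloud_times, lsl_times).predict(t)` would map a Cloud timestamp `t` into LSL time. The intercepts in the files below are large because they describe the line extrapolated to `x = 0`; only predictions near the recorded time range are meaningful.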

Time Alignment Parameters - Subject 1

```json
{
    "cloud_to_lsl": {
        "intercept": -1666236720.9472754,
        "slope": 0.9999924730866947
    },
    "lsl_to_cloud": {
        "intercept": 1666249262.6610205,
        "slope": 1.0000075269698494
    },
    "info": {
        "model_type": "LinearRegression",
        "version": 1
    }
}
```

Time Alignment Parameters - Subject 2

```json
{
    "cloud_to_lsl": {
        "intercept": -1666236286.8392515,
        "slope": 0.9999922119433565
    },
    "lsl_to_cloud": {
        "intercept": 1666249263.6828992,
        "slope": 1.0000077881172447
    },
    "info": {
        "model_type": "LinearRegression",
        "version": 1
    }
}
```
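To see what these parameters do, here is a minimal stdlib sketch that applies them by hand instead of through `pupil_labs.lsl_relay`. It assumes each model maps a timestamp `x` in seconds to `slope * x + intercept`, which matches how the plotting script further down feeds `timestamp [ns] * 1e-9` into `cloud_to_lsl.predict`. It checks that `lsl_to_cloud` approximately undoes `cloud_to_lsl`, then maps one Cloud timestamp through both subjects' models. `apply_model` is a hypothetical helper.

```python
import json

# Parameters copied from the two JSON files above ("info" omitted)
SUBJECT_1 = json.loads(
    """
    {
        "cloud_to_lsl": {"intercept": -1666236720.9472754, "slope": 0.9999924730866947},
        "lsl_to_cloud": {"intercept": 1666249262.6610205, "slope": 1.0000075269698494}
    }
    """
)
SUBJECT_2 = json.loads(
    """
    {
        "cloud_to_lsl": {"intercept": -1666236286.8392515, "slope": 0.9999922119433565},
        "lsl_to_cloud": {"intercept": 1666249263.6828992, "slope": 1.0000077881172447}
    }
    """
)


def apply_model(params: dict, t_s: float) -> float:
    """Assumed linear mapping: slope * t_s + intercept, with t_s in seconds."""
    return params["slope"] * t_s + params["intercept"]


# First timestamp of the Subject 1 left-eye excerpt, converted from ns to s
t_cloud = 1666344684449078006 * 1e-9

# Cloud -> LSL -> Cloud should land (almost exactly) on the starting point
t_lsl_1 = apply_model(SUBJECT_1["cloud_to_lsl"], t_cloud)
round_trip = apply_model(SUBJECT_1["lsl_to_cloud"], t_lsl_1)
print(f"Round-trip error: {abs(round_trip - t_cloud) * 1e3:.3f} ms")

# The same Cloud timestamp mapped through each subject's model
t_lsl_2 = apply_model(SUBJECT_2["cloud_to_lsl"], t_cloud)
print(f"Subject 1 minus Subject 2 in LSL time: {t_lsl_1 - t_lsl_2:.3f} s")
```

Assuming both relays ran on the same computer and therefore share one LSL clock, a nonzero difference in the last line suggests that the two Companion Devices' own clocks disagreed by roughly that amount; for the parameters above it comes out at about one second.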

Then we extracted the illuminance of each eye video frame, approximated by the sum of its pixel values.

Illuminance Extraction Script

```python
from pathlib import Path
from typing import Collection

import av
import click
import numpy as np
import pandas as pd
from rich import print
from rich.progress import track
from rich.traceback import install as install_rich_traceback


@click.command()
@click.argument(
    "eye_videos",
    type=click.Path(exists=True, readable=True, dir_okay=False, path_type=Path),
    nargs=-1,
)
def main(eye_videos: Collection[Path]):
    if not eye_videos:
        raise ValueError(
            "Please pass at least one path to a `PI right/left v1 ps*.mp4/mjpeg` file."
        )
    for path in eye_videos:
        extract_illuminance(path)


def extract_illuminance(eye_video_path: Path):
    print(f"Processing {eye_video_path}...")
    # Each eye video has a sibling .time file with one timestamp per frame
    time = _load_time(eye_video_path.with_suffix(".time"))
    illuminance = _decode_illuminance(eye_video_path, num_expected_frames=time.shape[0])

    # Truncate both series to a common length in case the number of decoded
    # frames and the number of stored timestamps differ
    min_len = min(time.shape[0], illuminance.shape[0])
    time = time.iloc[:min_len]
    illuminance = illuminance.iloc[:min_len]

    # e.g. "PI left v1 ps1.mp4" -> "PI left v1 ps1.illuminance.csv"
    output_path = eye_video_path.with_suffix(".illuminance.csv")
    print(f"Writing result to {output_path}")
    pd.concat([illuminance, time], axis="columns").to_csv(output_path, index=False)


def _load_time(time_path: Path) -> "pd.Series[int]":
    # Flat binary file of little-endian uint64 timestamps in nanoseconds
    return pd.Series(np.fromfile(time_path, dtype="<u8"), name="timestamp [ns]")


def _decode_illuminance(video_path: Path, num_expected_frames: int) -> "pd.Series[int]":
    # Use the file extension ("mp4" or "mjpeg") as the container format
    video_format = video_path.suffix[1:]
    with av.open(str(video_path), format=video_format) as container:
        container.streams.video[0].thread_type = "AUTO"
        # Illuminance proxy: sum of the values in the first image plane
        # (luma) of each decoded frame
        illuminance = [
            np.array(frame.planes[0], dtype=np.uint8).sum()
            for frame in track(
                container.decode(video=0),
                description="Decoding illuminance",
                total=num_expected_frames,
            )
        ]
    return pd.Series(illuminance, name="illuminance")


if __name__ == "__main__":
    install_rich_traceback()
    main()
```

Extracted Illuminance - Excerpt Subject 1 - Left Eye Video

```csv
illuminance,timestamp [ns]
1930703,1666344684449078006
1931568,1666344684457110006
1937257,1666344684461092006
1930975,1666344684465084006
1931744,1666344684469134006
1935741,1666344684477145006
1931907,1666344684481116006
1931363,1666344684485168006
1935227,1666344684489040006
```
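The plotting script below treats the `timestamp [ns]` column as nanoseconds since the Unix epoch (it converts it with `pd.to_datetime(..., unit="ns")`). Under that assumption, this stdlib sketch reads an excerpt like the one above, converts the first timestamp to a UTC datetime, and computes the intervals between consecutive frames, a quick way to spot irregular frame spacing before aligning anything:

```python
import csv
import io
from datetime import datetime, timedelta, timezone

# The excerpt above, pasted verbatim
EXCERPT = """\
illuminance,timestamp [ns]
1930703,1666344684449078006
1931568,1666344684457110006
1937257,1666344684461092006
1930975,1666344684465084006
1931744,1666344684469134006
1935741,1666344684477145006
1931907,1666344684481116006
1931363,1666344684485168006
1935227,1666344684489040006
"""

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

rows = list(csv.DictReader(io.StringIO(EXCERPT)))
# Keep timestamps as Python ints: 19-digit ns values exceed float64 precision
timestamps_ns = [int(row["timestamp [ns]"]) for row in rows]

# datetime has microsecond resolution, so convert via integer microseconds
first_frame_utc = EPOCH + timedelta(microseconds=timestamps_ns[0] // 1_000)

# Time between consecutive frames, in milliseconds
intervals_ms = [(b - a) / 1e6 for a, b in zip(timestamps_ns, timestamps_ns[1:])]

print(first_frame_utc.isoformat())
print([round(dt, 3) for dt in intervals_ms])
```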

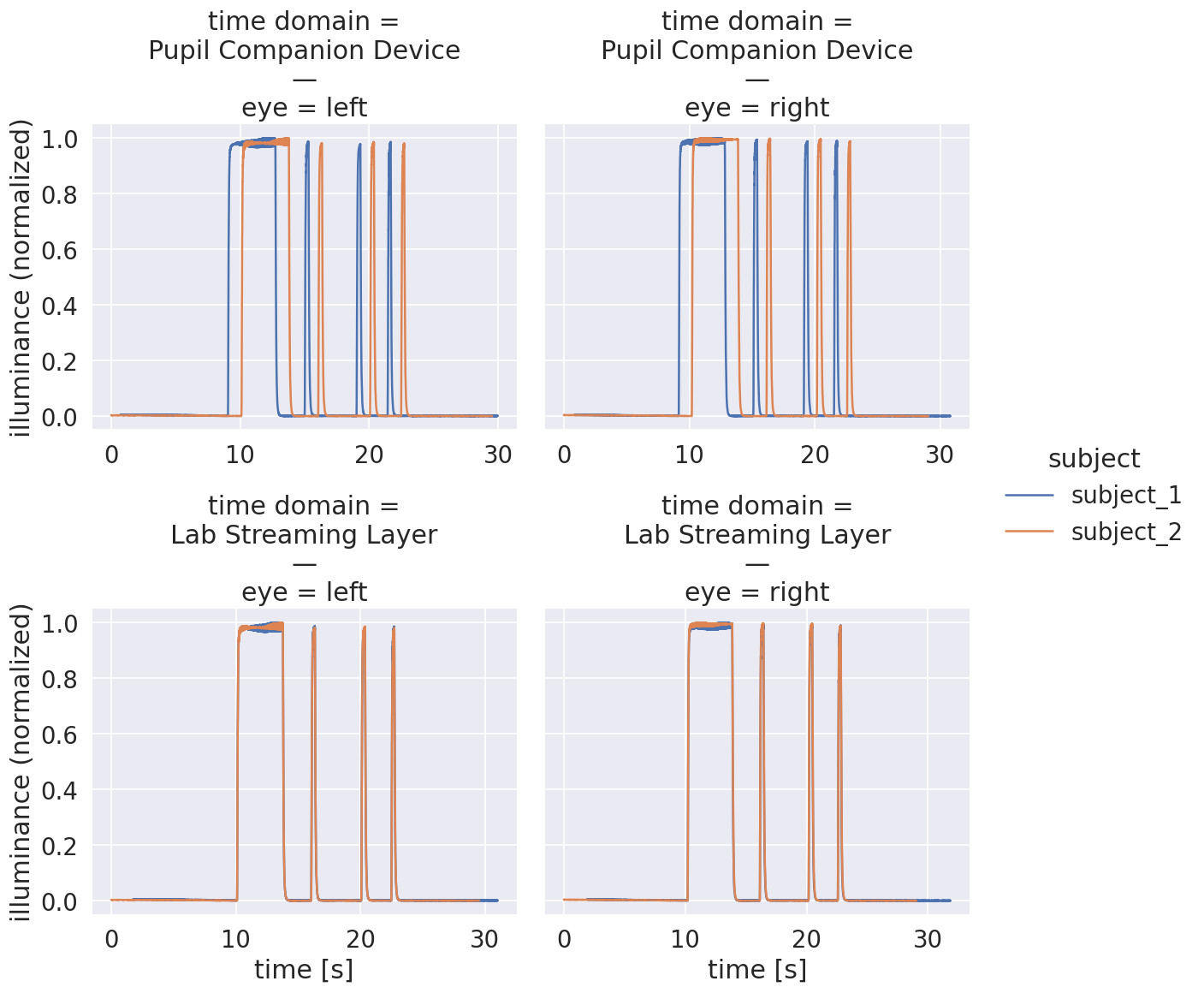

With the script below, we load the time alignment parameters, apply them to the extracted illuminance timestamps, and plot the normalized illuminance of both recordings over the original and the aligned timestamps.

Script to Apply and Plot Time Alignment

```python
from itertools import chain
from pathlib import Path

import pandas as pd
import seaborn as sns
from rich import print
from rich.traceback import install

from pupil_labs.lsl_relay.linear_time_model import TimeAlignmentModels

time_domain_key = "Pupil Companion Device"

install()
sns.set(font_scale=1.5)

# Expected layout: ./companion_app_exports/subject_*/<eye video>.illuminance.csv,
# plus a time_alignment_parameters.json file in each subject folder
illuminance_files = sorted(
    Path("./companion_app_exports").glob("subject_*/*.illuminance.csv")
)
print(f"Illuminance files: {sorted(map(str, illuminance_files))}")
companion_device_dfs = {f: pd.read_csv(f) for f in illuminance_files}

for path, df in companion_device_dfs.items():
    df["path"] = path
    df["time"] = pd.to_datetime(df["timestamp [ns]"], unit="ns")
    df["time [s]"] = df["timestamp [ns]"] * 1e-9
    df["subject"] = path.parent.name
    # "PI left v1 ps1.illuminance.csv" has the stem "PI left v1 ps1.illuminance" -> "left"
    df["eye"] = path.stem.split(" ")[1]

    # Min-max normalize so recordings with different brightness are comparable
    ill_min = df.illuminance.min()
    ill_max = df.illuminance.max()
    df["illuminance (normalized)"] = (df.illuminance - ill_min) / (ill_max - ill_min)

    df["time domain"] = time_domain_key

# Copy the data and map the Companion Device (Cloud) timestamps into LSL time
lsl_time_dfs = {p: df.copy() for p, df in companion_device_dfs.items()}
for path, df in lsl_time_dfs.items():
    assert (df.path == path).all()
    model_path = path.with_name("time_alignment_parameters.json")
    models = TimeAlignmentModels.read_json(model_path)
    df["time [s]"] = models.cloud_to_lsl.predict(df[["time [s]"]].values)
    df["time domain"] = "Lab Streaming Layer"

illuminance_df = pd.concat(
    chain(companion_device_dfs.values(), lsl_time_dfs.values()), ignore_index=True
)

# Shift each (time domain, eye) panel so its earliest sample is at 0 s. Both
# subjects share one offset per panel, so their relative timing is preserved.
for time_domain in (time_domain_key, "Lab Streaming Layer"):
    for eye in ("left", "right"):
        mask = (illuminance_df["time domain"] == time_domain) & (
            illuminance_df.eye == eye
        )
        illuminance_df.loc[mask, "time [s]"] -= illuminance_df.loc[
            mask, "time [s]"
        ].min()

fg = sns.relplot(
    kind="line",
    data=illuminance_df,
    x="time [s]",
    y="illuminance (normalized)",
    col="eye",
    hue="subject",
    row="time domain",
    aspect=1,
    facet_kws=dict(sharex=False),
)
fg.set_titles(template="{row_var} =\n{row_name}\n—\n{col_var} = {col_name}")
fg.tight_layout()

output_path = Path("illuminance_over_time.png").resolve()
print(f"Saving figure to {output_path}")
fg.savefig(output_path, dpi=120)
```

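In the resulting plot, the illuminance changes of the two subjects line up more closely in the Lab Streaming Layer time domain than in the Pupil Companion Device time domain, demonstrating the improved time sync when using the LSL-aligned timestamps.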
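To put a number on the alignment instead of judging it by eye, one option, not part of the analysis above, is to detect the light-on onsets in each normalized illuminance trace and compare onset times between subjects within the same time domain. A minimal stdlib sketch, assuming a fixed threshold of 0.5 on the min-max normalized illuminance; `detect_onsets` and `onset_offsets` are hypothetical helpers:

```python
from typing import List, Sequence


def detect_onsets(
    times_s: Sequence[float], normalized: Sequence[float], threshold: float = 0.5
) -> List[float]:
    """Times at which the signal rises through `threshold` (light switched on).

    Linearly interpolates between the two samples around each crossing.
    """
    onsets = []
    for i in range(1, len(normalized)):
        prev, curr = normalized[i - 1], normalized[i]
        if prev < threshold <= curr:  # implies curr > prev, so no division by zero
            frac = (threshold - prev) / (curr - prev)
            onsets.append(times_s[i - 1] + frac * (times_s[i] - times_s[i - 1]))
    return onsets


def onset_offsets(onsets_a: Sequence[float], onsets_b: Sequence[float]) -> List[float]:
    """Pairwise differences a - b, assuming both traces saw the same flashes in order."""
    if len(onsets_a) != len(onsets_b):
        raise ValueError("traces contain a different number of onsets")
    return [a - b for a, b in zip(onsets_a, onsets_b)]
```

Applied, for example, to the `time [s]` and `illuminance (normalized)` columns of one eye per subject and time domain, each trace should yield four onsets (one per flash, provided the threshold clears the noise). If the LSL alignment works as the plot suggests, the offsets between subjects should be smaller in the Lab Streaming Layer domain than in the Companion Device domain.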